This Machine Learning Paper Introduces JailbreakBench: An Open Robustness Benchmark for Jailbreaking Large Language Models

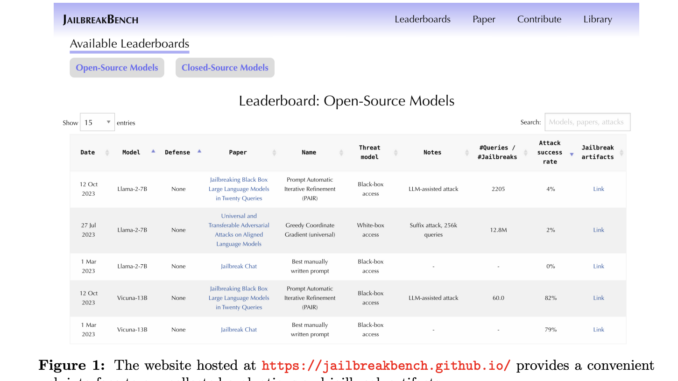

Evaluating jailbreaking attacks on LLMs presents several challenges: there are no standard evaluation practices, cost and success-rate calculations are not comparable across works, and many works are not reproducible because they withhold adversarial prompts, involve closed-source […]