
In the rapidly evolving field of Natural Language Processing (NLP), advanced conversational Question-Answering (QA) models are reshaping what human-computer interaction can do. Nvidia recently released a competitive QA/RAG fine-tune of Llama-3 70B. This model, Llama3-ChatQA-1.5, is a notable advance in Retrieval-Augmented Generation (RAG) and conversational question answering.

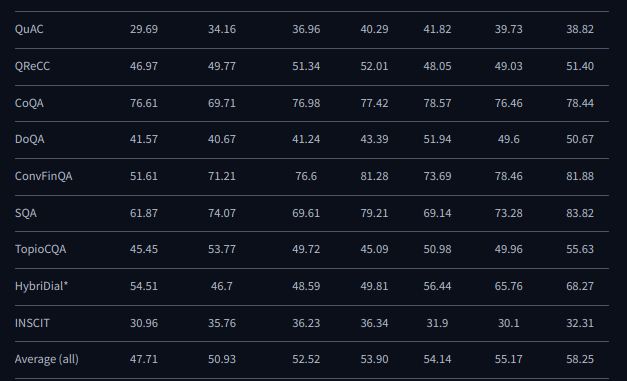

Llama3-ChatQA-1.5 builds on ChatQA (1.0), using the Llama-3 base model and an improved training recipe. A key change is the addition of more conversational QA data, which strengthens the model's tabular and arithmetic computation capabilities.

The model comes in two sizes, Llama3-ChatQA-1.5-8B and Llama3-ChatQA-1.5-70B, with 8 billion and 70 billion parameters, respectively. Both were originally trained with Megatron-LM and then converted to the Hugging Face format for easier access and use.

Llama3-ChatQA-1.5 builds on the success of ChatQA, a family of conversational QA models whose performance is comparable to GPT-4. ChatQA substantially improves the zero-shot conversational QA results of Large Language Models (LLMs) through a two-stage instruction tuning method.

For retrieval-augmented generation, ChatQA uses a dense retriever fine-tuned on a multi-turn QA dataset. This approach substantially lowers deployment costs while producing results on par with state-of-the-art query rewriting methods.
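To make the retrieval idea concrete, here is a minimal sketch of ranking document chunks against a multi-turn conversation. It assumes, as a simplification, that the query is the concatenated dialogue history rather than a rewritten standalone question. The term-count vectors are a hypothetical stand-in for the learned neural encoder a real dense retriever such as ChatQA's would use, and the function names `tokenize`, `embed`, and `retrieve` are illustrative only, not part of any Nvidia API.

```python
import math
import re


def tokenize(text: str) -> list[str]:
    # Lowercase and split into alphanumeric tokens.
    return re.findall(r"[a-z0-9]+", text.lower())


def embed(text: str, vocab: dict[str, int]) -> list[float]:
    # Hypothetical stand-in for a learned dense encoder: a fixed-length
    # term-count vector over a shared vocabulary, L2-normalized so that
    # the dot product below equals cosine similarity.
    vec = [0.0] * len(vocab)
    for tok in tokenize(text):
        if tok in vocab:
            vec[vocab[tok]] += 1.0
    norm = math.sqrt(sum(v * v for v in vec))
    return [v / norm for v in vec] if norm else vec


def retrieve(turns: list[str], chunks: list[str], k: int = 1) -> list[str]:
    # Multi-turn query: concatenate the whole dialogue history instead of
    # rewriting the last question into a standalone query.
    query = " ".join(turns)

    # Build one vocabulary covering the query and all chunks.
    vocab: dict[str, int] = {}
    for text in [query, *chunks]:
        for tok in tokenize(text):
            vocab.setdefault(tok, len(vocab))

    q = embed(query, vocab)
    # Score each chunk by cosine similarity and keep the top k.
    scored = sorted(
        ((sum(a * b for a, b in zip(q, embed(c, vocab))), c) for c in chunks),
        key=lambda pair: pair[0],
        reverse=True,
    )
    return [chunk for _, chunk in scored[:k]]


if __name__ == "__main__":
    chunks = [
        "ChatQA models use a dense retriever fine-tuned on multi-turn QA data.",
        "The cafeteria menu changes every Tuesday.",
    ]
    turns = [
        "How does ChatQA handle retrieval?",
        "Was its retriever fine-tuned on multi-turn data?",
    ]
    print(retrieve(turns, chunks, k=1))
```

In a real pipeline, the top-ranked chunks would be placed into the model's prompt as context; the design point being illustrated is that a retriever trained on conversational data can consume the raw dialogue directly, which removes the separate query-rewriting step.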

The move to Meta's Llama 3 marks a significant turning point in AI development, as these models set new standards in the field. Released at 8B and 70B parameters, the Llama 3 models perform strongly across a range of industry benchmarks and show improved reasoning capabilities.

The Llama team’s future goals include extending Llama 3 to multilingual and multimodal settings, improving contextual understanding, and continuing to advance core LLM capabilities such as code generation and reasoning. The overarching aim is to deliver the most capable and accessible open-source models, encouraging innovation and collaboration within the AI community.

Llama 3 significantly outperforms Llama 2 and sets a new benchmark for LLMs at the 8B and 70B parameter scales. Major improvements to the pre-training and post-training procedures have markedly improved response diversity and model alignment, as well as key capabilities such as reasoning and instruction following.

In conclusion, Llama3-ChatQA-1.5 represents state-of-the-art progress in NLP and sets a standard for future open-source AI models, ushering in a new era of conversational QA and retrieval-augmented generation. As the Llama project develops, it is expected to spur responsible AI adoption across many areas and accelerate innovation.


Tanya Malhotra is a final-year undergraduate at the University of Petroleum & Energy Studies, Dehradun, pursuing a BTech in Computer Science Engineering with a specialization in Artificial Intelligence and Machine Learning. She is a Data Science enthusiast with strong analytical and critical thinking skills, along with an ardent interest in acquiring new skills, leading groups, and managing work in an organized manner.
