Text-to-image generation is an area of computer vision that uses complex generative models to bridge the gap between textual semantics and visual representation. Its applications range from digital art creation to design workflows. One of the primary challenges in this domain is generating high-quality images that closely align with a given text prompt, and doing so efficiently.

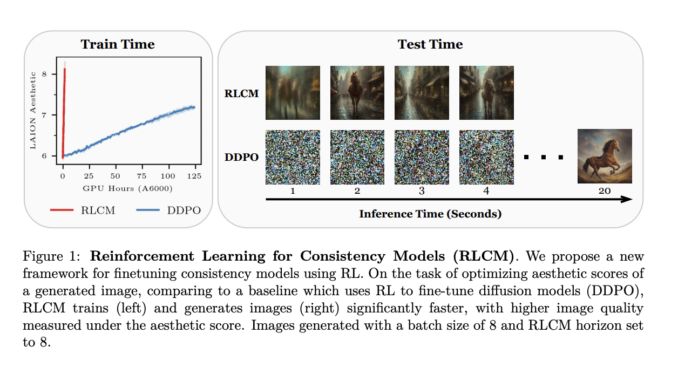

Existing research includes foundational diffusion models, which produce high-quality, realistic images by gradually removing noise over many steps. Parallel work on consistency models offers a quicker route by mapping noise directly to data, which makes image creation more efficient. Integrating reinforcement learning (RL) with diffusion models is another significant development: it treats the model’s inference as a sequential decision-making process and steers image generation towards specific goals. Despite these advances, the methods share a common issue, a trade-off between generation quality and computational efficiency, and the resulting slow processing times limit their practical use in real-time scenarios.
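The contrast between iterative denoising and a direct noise-to-data mapping can be illustrated with a toy sketch. Everything here is an assumption made for illustration and is not taken from the paper: the "data" is a single point `MU`, the noise schedule uses sigma(t) = t, and the class and function names are hypothetical. In this degenerate setting the ideal denoiser and the consistency function have closed forms, so the only thing the sketch shows is how many model evaluations each route needs.

```python
import math

MU = 3.0        # the single "data point" of this toy distribution (assumption)
T_MAX = 80.0    # largest noise level (assumption)
T_MIN = 0.002   # smallest noise level, where sampling stops (assumption)


class CountingDenoiser:
    """Ideal denoiser for point-mass data; counts how often it is called."""

    def __init__(self):
        self.calls = 0

    def __call__(self, x, t):
        self.calls += 1
        return MU  # the best guess of the clean sample is always MU


class CountingConsistencyFn:
    """Maps a noisy point at level t straight to its trajectory's start at T_MIN."""

    def __init__(self):
        self.calls = 0

    def __call__(self, x, t):
        self.calls += 1
        return MU + (T_MIN / t) * (x - MU)


def iterative_sample(x_noisy, denoiser, num_steps):
    """Euler steps along dx/dt = (x - D(x, t)) / t from T_MAX down to T_MIN."""
    ts = [T_MAX * (T_MIN / T_MAX) ** (i / num_steps) for i in range(num_steps + 1)]
    x = x_noisy
    for t_cur, t_next in zip(ts, ts[1:]):
        slope = (x - denoiser(x, t_cur)) / t_cur
        x = x + (t_next - t_cur) * slope
    return x


if __name__ == "__main__":
    x_noisy = MU + 0.5 * T_MAX  # a fixed "noise" draw at the highest level
    denoiser = CountingDenoiser()
    consistency_fn = CountingConsistencyFn()
    slow = iterative_sample(x_noisy, denoiser, num_steps=50)
    fast = consistency_fn(x_noisy, T_MAX)
    print(f"iterative: {slow:.6f} using {denoiser.calls} evaluations")
    print(f"one-step:  {fast:.6f} using {consistency_fn.calls} evaluation")
```

Both routes land on the same point, but the iterative sampler evaluates its model once per step while the consistency function is evaluated once. Real models are learned networks rather than closed-form functions, so the agreement is only approximate in practice.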

A team of researchers from Cornell University has introduced the Reinforcement Learning for Consistency Models (RLCM) framework, which accelerates text-to-image generation. Unlike traditional approaches that rely on many steps of iterative refinement, RLCM uses RL to fine-tune consistency models, enabling rapid image generation without sacrificing quality.

The RLCM framework applies a policy gradient approach to fine-tune consistency models, specifically targeting the Dreamshaper v7 model for optimization. The methodology relies on datasets like LAION for aesthetic assessments, alongside a bespoke dataset designed to evaluate image compressibility and incompressibility tasks. With this setup, RLCM adapts the models to generate high-quality images while optimizing for both speed and fidelity to task-specific rewards. Applying RL to a consistency model rather than a diffusion model is what reduces both training and inference times, and the approach remains effective across varied image generation objectives.
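To make the idea of a policy gradient driven by a task-specific reward concrete, here is a minimal REINFORCE sketch on a toy problem. It is not the RLCM algorithm, which fine-tunes an image model over its inference trajectory. The one-parameter "generator", the 64-pixel image, and the use of `zlib` output length as a compressibility score are all assumptions for illustration; the article does not say how compressibility is measured.

```python
import math
import random
import zlib

N_PIXELS = 64     # size of the toy "image" (assumption)
FLAT_VALUE = 128  # value used for "flat" pixels (assumption)


def sample_image(p_flat, rng):
    """Each pixel is flat with probability p_flat, otherwise a random byte."""
    actions = [1 if rng.random() < p_flat else 0 for _ in range(N_PIXELS)]
    pixels = bytes(FLAT_VALUE if a else rng.randrange(256) for a in actions)
    return actions, pixels


def compressibility_reward(pixels):
    """Higher reward for smaller compressed size (zlib is a stand-in scorer)."""
    return -len(zlib.compress(pixels))


def train(steps=100, batch_size=32, lr=0.01, seed=0):
    """REINFORCE with a batch-mean baseline on the single logit theta."""
    rng = random.Random(seed)
    theta = 0.0  # p_flat = sigmoid(theta), so training starts at 0.5
    for _ in range(steps):
        p_flat = 1.0 / (1.0 + math.exp(-theta))
        batch = [sample_image(p_flat, rng) for _ in range(batch_size)]
        rewards = [compressibility_reward(pixels) for _, pixels in batch]
        baseline = sum(rewards) / batch_size
        grad = 0.0
        for (actions, _), reward in zip(batch, rewards):
            # d/dtheta of log Bernoulli(a; sigmoid(theta)) is (a - p_flat)
            score = sum(a - p_flat for a in actions)
            grad += (reward - baseline) * score
        theta += lr * grad / batch_size  # gradient ascent on expected reward
    return 1.0 / (1.0 + math.exp(-theta))


if __name__ == "__main__":
    print(f"p_flat after training: {train():.3f}")
```

Because flat images compress better, samples with more flat pixels earn above-baseline rewards and the update raises `p_flat`. Negating the reward would turn the same loop into an incompressibility task.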

Compared to traditional RL fine-tuned diffusion models, RLCM trains up to 17 times faster. On the image compressibility task, RLCM generated images with a 50% reduction in the number of inference steps, which translates to a substantial decrease in processing time from prompt to output. On aesthetic evaluation tasks, RLCM improved reward scores by 30% compared to conventional methods. These results underscore RLCM’s capacity to deliver high-quality images efficiently.

To conclude, the research introduced the RLCM framework, a novel method that significantly accelerates the text-to-image generation process. By leveraging RL to fine-tune consistency models, RLCM achieves faster training and inference times while maintaining high image quality. The framework’s superior performance on various tasks, including aesthetic score optimization and image compressibility, showcases its potential to enhance the efficiency and applicability of generative models. This pivotal contribution offers a promising direction for future computer vision and artificial intelligence developments.

Check out the Paper and Project. All credit for this research goes to the researchers of this project.


Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in Material Science, he is exploring new advancements and creating opportunities to contribute.
