Data privacy is a major concern in today’s world, with many countries enacting laws like the EU’s General Data Protection Regulation (GDPR) to protect personal information. In machine learning, a key issue arises when clients want to leverage pre-trained models by fine-tuning them on their own data. Sharing the features extracted from that data with the model provider can expose sensitive client information through feature inversion attacks.

Previous approaches to privacy-preserving transfer learning have relied on techniques like secure multi-party computation (SMPC), differential privacy (DP), and homomorphic encryption (HE). SMPC incurs significant communication overhead and DP can reduce accuracy. HE-based methods have shown promise but are computationally expensive.

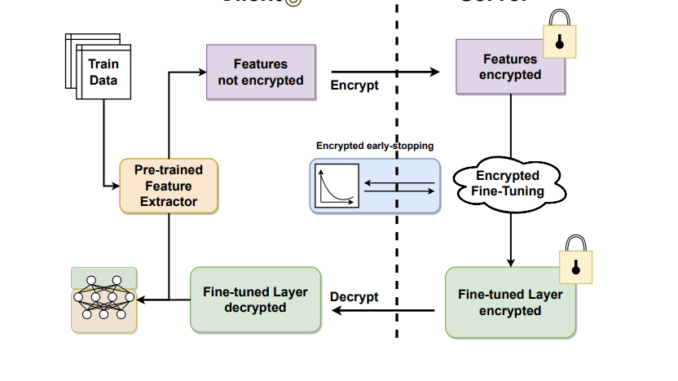

A team of researchers has now developed HETAL, an efficient HE-based algorithm (shown in Figure 1) for privacy-preserving transfer learning. Their method allows clients to encrypt data features and send them to a server for fine-tuning without compromising data privacy.

At the core of HETAL is an optimized process for encrypted matrix multiplications, a dominant operation in neural network training. The researchers propose novel algorithms, DiagABT and DiagATB, that significantly reduce the computational costs compared to previous methods. Additionally, HETAL introduces a new approximation algorithm for the softmax function, a critical component in neural networks. Unlike prior approaches with limited approximation ranges, HETAL’s algorithm can handle input values spanning exponentially large intervals, enabling accurate training over many epochs.
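To give a feel for why matrix products get reorganized under HE, here is a minimal plaintext sketch in Python. It is not HETAL’s DiagABT. It only illustrates the general idea of building A·Bᵀ one diagonal at a time from the operations that packed HE schemes typically make cheap: cyclic rotations, elementwise products, and sums. The names `rotate_rows` and `abt_by_diagonals` are made up for this illustration, plain Python lists stand in for ciphertexts, and both matrices are assumed to have the same number of rows.

```python
def rotate_rows(M, k):
    """Cyclically shift the rows of M up by k.

    Hypothetical stand-in for a ciphertext rotation; no encryption happens here.
    """
    n = len(M)
    return [M[(i + k) % n] for i in range(n)]


def abt_by_diagonals(A, B):
    """Compute A @ B^T using only rotations, elementwise products and sums.

    Assumption for this sketch: A and B are lists of rows with the same
    number of rows n and the same row length.
    """
    n = len(A)
    if len(B) != n:
        raise ValueError("this sketch assumes A and B have the same number of rows")
    C = [[0.0] * n for _ in range(n)]
    for k in range(n):
        # After the rotation, row i of B_rot is row (i + k) % n of B.
        B_rot = rotate_rows(B, k)
        for i in range(n):
            # Elementwise product of aligned rows, then a sum over the features.
            dot = sum(a * b for a, b in zip(A[i], B_rot[i]))
            # This value is entry (i, (i + k) % n) of A @ B^T,
            # i.e. one element of the k-th wrapped diagonal.
            C[i][(i + k) % n] = dot
    return C


def abt_reference(A, B):
    """Textbook A @ B^T, used only to check the sketch."""
    return [[sum(a * b for a, b in zip(ra, rb)) for rb in B] for ra in A]


A = [[1.0, 2.0], [3.0, 4.0], [5.0, 6.0]]
B = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]
result = abt_by_diagonals(A, B)
assert result == abt_reference(A, B)
```

In this naive form, an n×n result needs n rotations of B. The article does not spell out how DiagABT and DiagATB cut that cost, so treat the sketch only as background on the kind of operation being optimized.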

The researchers demonstrated HETAL’s effectiveness through experiments on five benchmark datasets, including MNIST, CIFAR-10, and DermaMNIST (results shown in Table 1). Their encrypted models achieved accuracy within 0.51% of their unencrypted counterparts while maintaining practical runtimes, often under an hour.

HETAL addresses a crucial challenge in privacy-preserving machine learning by enabling efficient, encrypted transfer learning. The proposed method protects client data privacy through homomorphic encryption while allowing model fine-tuning on the server side. Moreover, HETAL’s novel matrix multiplication algorithms and softmax approximation technique can potentially benefit other applications involving neural networks and encrypted computations. While limitations may exist, this work represents a significant step towards practical, privacy-preserving solutions for machine learning as a service.

Check out the Paper and Github. All credit for this research goes to the researchers of this project.


Vineet Kumar is a consulting intern at MarktechPost. He is currently pursuing his BS at the Indian Institute of Technology (IIT), Kanpur. He is a machine learning enthusiast, passionate about research and the latest advancements in deep learning, computer vision, and related fields.
