SLAM (Simultaneous Localization and Mapping) is a core technique in robotics and computer vision. It lets a machine estimate where it is while building a map of its surroundings. Dense visual SLAM systems struggle with motion-blurred images for two reasons: 1) Inaccurate pose estimation during tracking: Current photo-realistic dense visual SLAM algorithms estimate camera poses by enforcing consistent brightness across views, which assumes sharp input images. Blur breaks this assumption, and the resulting pose errors carry over into mapping. 2) Inconsistent multi-view geometry in mapping: Blurred frames from different views yield unreliable features, which cause errors in the 3D geometry and a low-quality reconstruction of the 3D map. Together, these two factors mean that existing dense visual SLAM systems usually perform poorly on motion-blurred images.

Traditional sparse SLAM methods use sparse point clouds for map reconstruction, while recent learning-based dense SLAM systems focus on generating dense maps, which are important for downstream tasks. Neural Radiance Fields (NeRF) and 3D Gaussian Splatting (3DGS) have been combined with SLAM systems to create realistic 3D scenes, improving map quality and texture. However, existing methods rely heavily on high-quality, sharp RGB-D inputs. Motion-blurred frames, which are common in low-light or long-exposure conditions, therefore reduce the precision and efficiency of both localization and mapping.

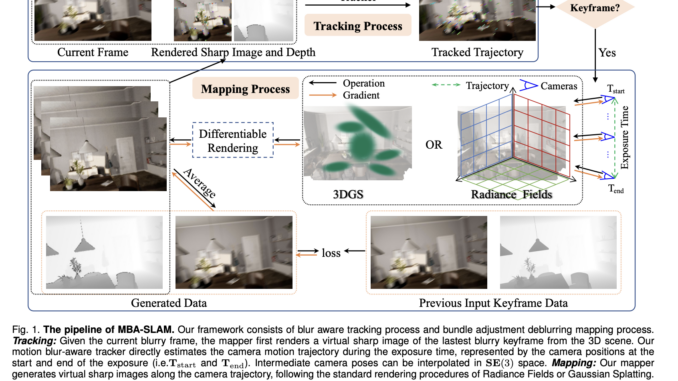

To address these problems, a group of researchers from China proposed MBA-SLAM, a photo-realistic dense RGB-D SLAM pipeline designed to handle motion-blurred inputs effectively. The approach integrates the physical motion blur imaging process into both the tracking and mapping stages. The framework aims to reconstruct high-quality, dense 3D scenes and accurately estimate camera motion trajectories. It does so through two key components: a motion blur-aware tracker and a bundle-adjusted deblur mapper based on NeRF or 3D Gaussian Splatting.

The method uses a continuous motion model to describe the camera’s movement during the exposure time, representing each motion-blurred image by the camera’s start and end poses. During tracking, a sharp reference image is rendered, blurred according to the current motion estimate so that it matches the captured image, and the two are compared to refine that estimate. During mapping, the camera trajectories and the 3D scene are optimized together to reduce image-matching errors. Two scene representations were explored: implicit neural radiance fields (NeRF) and explicit 3D Gaussian Splatting (3DGS). NeRF achieved higher frame rates but lower rendering quality, while 3DGS offered better quality at the cost of lower frame rates.
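The blur-aware tracking idea can be illustrated with a toy sketch. Everything here is an assumption made for illustration, not the authors' implementation: the scene is a 1D list of intensities, the camera pose is a single integer offset, `render_sharp` is a hypothetical stand-in for a NeRF or 3DGS renderer, and the tracker uses a brute-force search over candidate end poses with the start pose held fixed, where a real system would optimize full 6-DoF start and end poses by gradient descent.

```python
def render_sharp(scene, offset):
    """Hypothetical renderer: view a 1D scene from an integer camera offset."""
    n = len(scene)
    return [scene[(i + offset) % n] for i in range(n)]


def interpolate_offsets(start, end, n_samples):
    """Linearly interpolate camera offsets across the exposure window."""
    if n_samples == 1:
        return [start]
    step = (end - start) / (n_samples - 1)
    return [round(start + step * k) for k in range(n_samples)]


def synthesize_blur(scene, start, end, n_samples=5):
    """Average the virtual sharp views along the trajectory into one blurred image."""
    offsets = interpolate_offsets(start, end, n_samples)
    views = [render_sharp(scene, o) for o in offsets]
    return [sum(pixels) / len(views) for pixels in zip(*views)]


def photometric_error(image_a, image_b):
    """Mean squared intensity difference between two images."""
    return sum((a - b) ** 2 for a, b in zip(image_a, image_b)) / len(image_a)


def track(scene, observed, start, candidate_ends, n_samples=5):
    """Return the end offset whose synthesized blur best matches the observation."""
    return min(
        candidate_ends,
        key=lambda end: photometric_error(
            synthesize_blur(scene, start, end, n_samples), observed
        ),
    )


# Usage: blur a single bright pixel along a known motion, then recover that motion.
scene = [0.0] * 16
scene[2] = 1.0
observed = synthesize_blur(scene, start=0, end=4)
estimated_end = track(scene, observed, start=0, candidate_ends=range(8))
```

The key point is that the comparison happens in the blurred domain: the sharp render is never matched against the captured frame directly, so the map can stay sharp while the motion within the exposure is still recovered.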

The method showed a measurable reduction in tracking errors. On the ScanNet dataset it reached an ATE RMSE of 0.053, outperforming ORB-SLAM3 (0.081) and LDS-SLAM (0.071). On the TUM RGB-D dataset, MBA-SLAM achieved an ATE RMSE of 0.062, again indicating strong tracking precision. In image reconstruction, MBA-SLAM reached a PSNR of 31.2 dB on the ArchViz dataset and an SSIM of 0.96 on ScanNet, outperforming methods like ORB-SLAM3 and DSO in terms of quality. Its reported LPIPS score of 0.18 also reflects better perceptual quality. Radiance fields and Gaussian splatting improved image quality, while CUDA acceleration enabled real-time processing, making it 5 times faster than the compared methods. Overall, MBA-SLAM provided better tracking accuracy, image quality, and speed than the other methods, which makes it promising for SLAM scenarios where motion blur arises in dynamic conditions.
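For readers unfamiliar with the two headline metrics, here is a minimal sketch of how ATE RMSE and PSNR are commonly computed. It assumes the estimated and ground-truth trajectories are already time-associated and expressed in the same coordinate frame (standard evaluation tools first perform a rigid alignment, which is omitted here), and that images are flat lists of intensities in [0, 1]. The function names are illustrative and are not taken from the MBA-SLAM code.

```python
import math


def ate_rmse(estimated, ground_truth):
    """Root mean squared position error between two pre-aligned trajectories."""
    squared_errors = [
        sum((e - g) ** 2 for e, g in zip(est_pos, gt_pos))
        for est_pos, gt_pos in zip(estimated, ground_truth)
    ]
    return math.sqrt(sum(squared_errors) / len(squared_errors))


def psnr(image, reference, max_value=1.0):
    """Peak signal-to-noise ratio in dB; higher means closer to the reference."""
    mse = sum((a - b) ** 2 for a, b in zip(image, reference)) / len(image)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# Usage: a two-pose trajectory with one erroneous pose, and a two-pixel image.
trajectory_error = ate_rmse([(0, 0, 0), (1, 0, 0)], [(0, 0, 0), (1, 0, 1)])
image_quality_db = psnr([0.5, 0.5], [0.5, 0.6])
```

Lower ATE RMSE means the estimated camera path stays closer to the ground truth, while higher PSNR means the rendered image is closer to the reference.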

In summary, the proposed MBA-SLAM framework addresses the problem of motion blur in dense visual SLAM. With its physical motion blur image formation model, highly CUDA-optimized blur-aware tracker, and deblurring mapper, MBA-SLAM tracks accurate camera motion trajectories within the exposure time and reconstructs a sharp, photo-realistic map from the input video sequence. It performed much better than previous methods on existing and real-world datasets. This work marks a significant development in the field of SLAM systems and can serve as a baseline for future research.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.

Divyesh is a consulting intern at Marktechpost. He is pursuing a BTech in Agricultural and Food Engineering at the Indian Institute of Technology, Kharagpur. He is a data science and machine learning enthusiast who wants to apply these technologies to challenges in the agricultural domain.
