Large Language Models (LLMs) such as OpenAI’s GPT-4 have come to dominate Natural Language Processing (NLP) and much of the wider field of Artificial Intelligence (AI). These models are trained on large datasets to predict the next word in a sequence and are then refined with human feedback. They perform well on a variety of tasks, including summarization and question-answering, which points to potential uses in biomedical research and healthcare.

Specialized models, such as Med-PaLM 2, have greatly influenced fields such as healthcare and biomedical research by enabling activities like radiological report interpretation, clinical information analysis from electronic health records, and information retrieval from biomedical literature. Improving domain-specific language models can lead to lower healthcare costs, faster biological discovery, and better patient outcomes.

However, LLMs still face several obstacles despite their impressive performance. The cost of training and running these models has risen significantly over time, raising both financial and environmental concerns. The closed nature of these models, which are run by large technology companies, also raises concerns about accessibility and data privacy.

In the biomedical field, the closed structure of these models prevents additional fine-tuning for particular needs. Models such as PubMedBERT, SciBERT, and BioBERT do provide domain-specific answers, but they are small compared to general-purpose models such as GPT-4.

To address these issues, a team of researchers from Stanford University and Databricks has developed and released BioMedLM, a GPT-style autoregressive model with 2.7 billion parameters. BioMedLM outperforms generic English models on multiple benchmarks and achieves competitive performance on biomedical question-answering tasks.

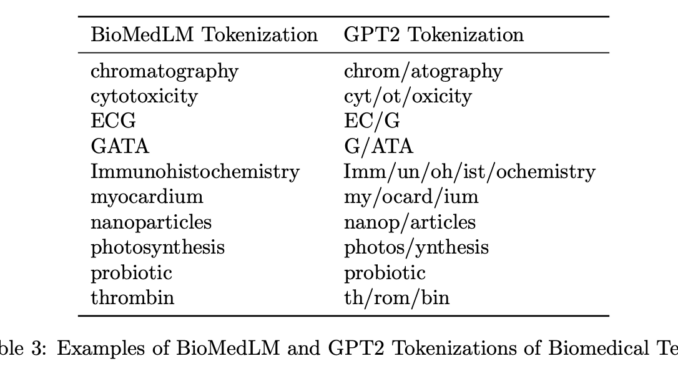

To provide a targeted and carefully selected corpus for biomedical NLP tasks, BioMedLM is trained only on PubMed abstracts and full articles. When fine-tuned for specific biomedical applications, BioMedLM performs robustly despite being much smaller than the largest models.

Evaluations have shown that BioMedLM does well on multiple-choice biomedical question-answering tasks, with results that are competitive with those of larger models. It scores 69.0% on the MMLU Medical Genetics test and 57.3% on the MedMCQA (dev) dataset, which indicates that it can draw pertinent information from biomedical texts.
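To make those percentages concrete, here is a minimal sketch of how accuracy on a multiple-choice benchmark is typically computed: each answer option gets a score, the highest-scoring option is taken as the prediction, and accuracy is the fraction of questions answered correctly. This is not the researchers' evaluation code. The names `pick_answer`, `accuracy`, and `toy_score` are hypothetical, the two questions are invented for illustration, and `toy_score` is a simple word-overlap stand-in for whatever score a real model would assign to each option.

```python
from typing import Callable, Sequence, Tuple

# (question, answer options, index of the correct option)
Example = Tuple[str, Sequence[str], int]
Scorer = Callable[[str, str], float]


def pick_answer(question: str, options: Sequence[str], score: Scorer) -> int:
    """Return the index of the option with the highest score (first one on ties)."""
    scores = [score(question, option) for option in options]
    return max(range(len(options)), key=scores.__getitem__)


def accuracy(examples: Sequence[Example], score: Scorer) -> float:
    """Fraction of examples where the top-scoring option is the correct one."""
    correct = sum(
        pick_answer(question, options, score) == gold
        for question, options, gold in examples
    )
    return correct / len(examples)


def toy_score(question: str, option: str) -> float:
    """Hypothetical stand-in for a model score: count of shared lowercase words."""
    question_words = set(question.lower().split())
    return float(len(question_words & set(option.lower().split())))


# Invented toy data, not taken from MedMCQA or MMLU.
EXAMPLES = [
    ("Which vitamin deficiency causes scurvy?",
     ["vitamin c", "iron", "calcium", "zinc"], 0),
    ("Which organ produces insulin?",
     ["liver", "pancreas", "kidney", "spleen"], 1),
]

if __name__ == "__main__":
    print(f"accuracy = {accuracy(EXAMPLES, toy_score):.1%}")
```

In a real evaluation the scorer would come from the language model itself, for example by comparing how likely the model finds each option given the question; the accuracy calculation stays the same.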

The team has shared that BioMedLM can be improved even further to produce insightful answers to patient inquiries about medical subjects. This adaptability highlights how smaller models, such as BioMedLM, can function as effective, transparent, and privacy-preserving solutions for specialized NLP applications, especially in the biomedical field.

As a more compact option that requires less computational overhead for training and deployment, BioMedLM offers benefits in terms of resource efficiency and environmental impact. Its reliance on a curated dataset also improves openness and reliability, addressing concerns about opaque training data sources.
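A rough back-of-the-envelope calculation shows why a 2.7-billion-parameter model is comparatively easy to deploy. The sketch below estimates only the memory needed to hold the weights at common numeric precisions. It deliberately ignores activations, optimizer state, and inference caches, so actual requirements will be higher. The function name `weights_gb` is hypothetical, and the precision the released model actually uses is not stated here.

```python
# Bytes needed to store one parameter at common precisions.
BYTES_PER_PARAM = {"fp32": 4, "fp16/bf16": 2, "int8": 1}

BIOMEDLM_PARAMS = 2_700_000_000  # 2.7 billion parameters, as stated in the article


def weights_gb(num_params: int, bytes_per_param: int) -> float:
    """Memory for the weights alone, in gigabytes (1 GB = 1e9 bytes)."""
    return num_params * bytes_per_param / 1e9


if __name__ == "__main__":
    for precision, nbytes in BYTES_PER_PARAM.items():
        print(f"{precision:>9}: {weights_gb(BIOMEDLM_PARAMS, nbytes):.1f} GB")
```

At 16-bit precision the weights alone come to roughly 5.4 GB, which is within reach of a single commodity GPU.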

Check out the Paper and Model. All credit for this research goes to the researchers of this project.

Tanya Malhotra is a final-year undergraduate at the University of Petroleum & Energy Studies, Dehradun, pursuing a BTech in Computer Science Engineering with a specialization in Artificial Intelligence and Machine Learning. She is a Data Science enthusiast with strong analytical and critical thinking skills and a keen interest in acquiring new skills, leading groups, and managing work in an organized manner.