Symmetries are everywhere. The fundamental laws of physics are the same everywhere in space and time: they are symmetric under translations and rotations of spatial coordinates and under shifts in time. In addition, whenever several similar or equivalent objects are labeled with numbers, the system is symmetric under permutations of those labels. Embodied agents encounter this structure, and many everyday robotic tasks exhibit temporal, spatial, or permutation symmetries. A quadruped’s gaits are independent of its direction of motion; similarly, a robotic gripper may interact with several identical objects regardless of their labels. However, most planning and reinforcement learning (RL) algorithms do not take this rich structure into account.

Although these algorithms have shown impressive results on well-defined problems given enough training, they are often sample-inefficient and lack robustness to changes in the environment. The research team argues that it is critical to build RL algorithms that are aware of these symmetries in order to improve their sample efficiency and robustness. Such algorithms should meet two key requirements. First, the world and policy models need to be equivariant with respect to the relevant symmetry group. For embodied agents, this is often a subgroup of the product group of the spatial symmetry group SE(3), the group of discrete time shifts Z, and one or more object permutation groups Sn. Second, to solve practical tasks, it should be possible to softly break (parts of) the symmetry group. For example, the goal of a robotic gripper may be to move an object to a specified location in space, which breaks the SE(3) symmetry. Early work on equivariant RL has revealed the potential advantages of this approach. However, these works often consider only small finite symmetry groups, such as Cn, and they typically do not allow soft symmetry breaking at test time depending on the task at hand.
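To make the two requirements concrete, here is a minimal NumPy sketch. It is only an illustration and not code from the paper: `contract` and `move_to_goal` are hypothetical toy update rules standing in for a world model. A map f is equivariant if transforming the input and then applying f gives the same result as applying f and then transforming the output. The goal-directed rule stops being equivariant once the goal is held fixed, and becomes equivariant again when the goal is transformed together with the scene.

```python
import numpy as np

def rotation_z(theta):
    """3x3 rotation matrix about the z-axis."""
    c, s = np.cos(theta), np.sin(theta)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def contract(points, rate=0.5):
    """Toy update (hypothetical): move every object part-way to the centroid."""
    centroid = points.mean(axis=0, keepdims=True)
    return points + rate * (centroid - points)

def move_to_goal(points, goal, rate=0.5):
    """Toy goal-directed update (hypothetical): move every object toward a goal."""
    return points + rate * (goal - points)

rng = np.random.default_rng(0)
points = rng.normal(size=(4, 3))           # 4 objects in 3D
R, t = rotation_z(0.7), np.array([1.0, -2.0, 0.5])
perm = np.array([2, 0, 3, 1])              # a relabeling of the 4 objects
goal = np.array([1.0, 0.0, 0.0])

def transform(x):
    """Apply the SE(3) element (R, t) to a point or an array of points."""
    return x @ R.T + t

# Equivariance under SE(3): transform-then-update equals update-then-transform.
se3_equivariant = np.allclose(contract(transform(points)),
                              transform(contract(points)))          # True

# Equivariance under S_n: relabeling objects commutes with the update.
perm_equivariant = np.allclose(contract(points[perm]),
                               contract(points)[perm])              # True

# A goal fixed in space breaks the SE(3) symmetry ...
fixed_goal_equivariant = np.allclose(
    move_to_goal(transform(points), goal),
    transform(move_to_goal(points, goal)))                          # False

# ... but the rule is still equivariant if the goal is transformed as well.
joint_equivariant = np.allclose(
    move_to_goal(transform(points), transform(goal)),
    transform(move_to_goal(points, goal)))                          # True
```

The last two checks mirror the idea of soft symmetry breaking: the update rule itself stays symmetric, and the symmetry is broken only by the task specification supplied as an extra input.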

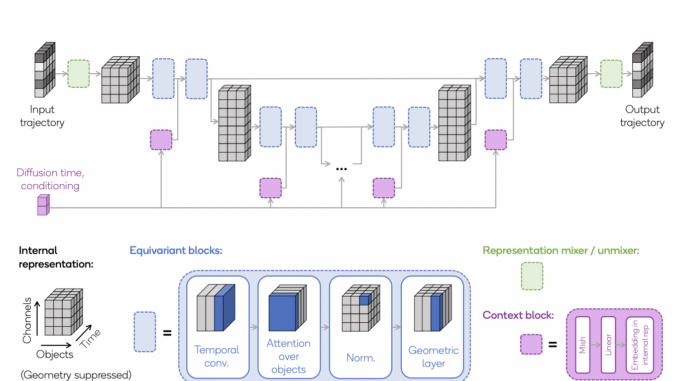

In this study, the research team from Qualcomm presents an equivariant method for model-based reinforcement learning and planning called the Equivariant Diffuser for Generating Interactions (EDGI). The core component of EDGI is equivariant with respect to the full product group SE(3) × Z × Sn, and it supports the multiple representations of this group that the research team expects to encounter in embodied settings. Furthermore, EDGI allows flexible soft symmetry breaking at test time, depending on the task. The approach builds on the previously proposed Diffuser method, which treats both learning a dynamics model and planning within it as a generative modeling problem. Diffuser’s main idea is to train a diffusion model on an offline dataset of state-action trajectories. To plan, one samples from this model conditioned on the current state, using classifier guidance to maximize reward. The team’s principal contribution is a novel diffusion model that supports multi-representation data and is equivariant with respect to the product group SE(3) × Z × Sn of spatial, temporal, and permutation symmetries.
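The planning loop described above can be sketched in a few lines. This is a toy illustration under strong assumptions, not the authors' implementation: `toy_denoiser` is a hypothetical stand-in for a trained diffusion model (it simply smooths the trajectory), the reward is an assumed quadratic distance to a goal, and the noise schedule and parameter names are made up for the example. It shows the two ingredients named in the text: conditioning on the current state and nudging each denoising step along the reward gradient.

```python
import numpy as np

def toy_denoiser(traj):
    """Hypothetical stand-in for a trained model: smooth each state toward its neighbours."""
    padded = np.pad(traj, ((1, 1), (0, 0)), mode="edge")
    return (padded[:-2] + padded[1:-1] + padded[2:]) / 3.0

def reward_grad(traj, goal):
    """Gradient of the assumed reward J = -0.5 * ||final_state - goal||^2."""
    grad = np.zeros_like(traj)
    grad[-1] = goal - traj[-1]
    return grad

def plan(current_state, goal, horizon=8, n_steps=200, guidance_scale=0.5, seed=0):
    """Sample a state trajectory by iterative denoising with reward guidance."""
    rng = np.random.default_rng(seed)
    traj = rng.normal(size=(horizon, current_state.shape[0]))  # start from noise
    for i in range(n_steps, 0, -1):
        sigma = 0.1 * i / n_steps                  # assumed linear noise schedule
        mean = toy_denoiser(traj)                  # denoising step
        mean = mean + guidance_scale * reward_grad(mean, goal)  # guidance term
        traj = mean + sigma * rng.normal(size=traj.shape)
        traj[0] = current_state                    # condition on the present state
    return traj

start = np.zeros(2)
goal = np.array([5.0, 5.0])
guided = plan(start, goal)
unguided = plan(start, goal, guidance_scale=0.0)
guided_error = np.linalg.norm(guided[-1] - goal)
unguided_error = np.linalg.norm(unguided[-1] - goal)
```

In this toy setting the guided plan ends closer to the goal than the unguided one, while both start exactly at the current state. In EDGI, the denoiser would be the equivariant diffusion model, and the goal-dependent guidance term is where the symmetry can be softly broken.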

The research team introduces novel temporal, object, and permutation layers that act on the individual symmetries, along with a new way of embedding multiple input representations into a single internal representation. When integrated into a planning algorithm, the method, combined with conditioning and classifier guidance, allows the symmetry group to be softly broken through test-time task specifications. The team demonstrates EDGI empirically in 3D navigation and robotic object manipulation environments. It finds that EDGI significantly improves performance in the low-data regime, matching the best non-equivariant baseline while using an order of magnitude less training data. Furthermore, EDGI generalizes well to previously unseen configurations and is noticeably more robust to symmetry transformations of the environment.

Check out the Paper. All credit for this research goes to the researchers of this project.

Aneesh Tickoo is a consulting intern at MarktechPost. He is currently pursuing his undergraduate degree in Data Science and Artificial Intelligence at the Indian Institute of Technology (IIT), Bhilai. He spends most of his time working on projects aimed at harnessing the power of machine learning. His research interest is image processing, and he is passionate about building solutions around it. He loves to connect with people and collaborate on interesting projects.
