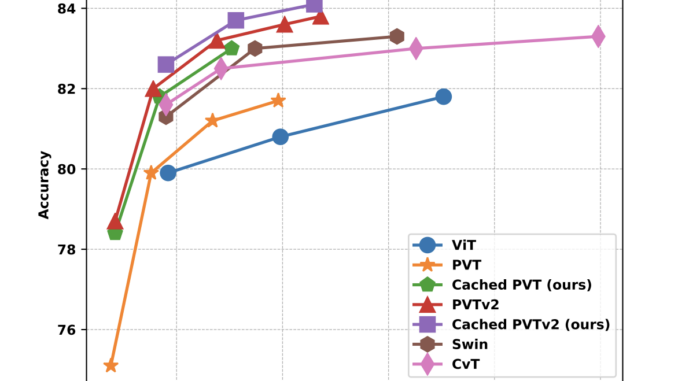

This AI Paper Unveils the Cached Transformer: A Transformer Model with GRC (Gated Recurrent Cached) Attention for Enhanced Language and Vision Tasks

Transformer models, known for handling sequential data effectively, play a central role in natural language processing and computer vision. They […]